The Modular Design of Smallest AI Atoms: Build What You Need

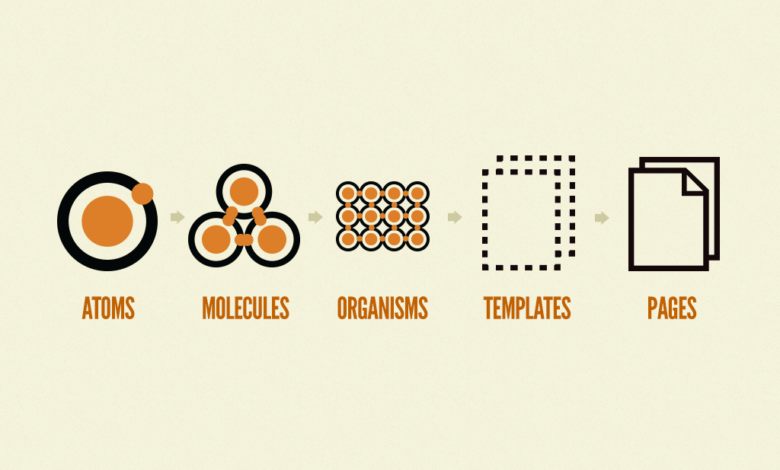

AI doesn’t need to be massive to be powerful. It just needs to be modular. In an era where agility matters more than scale, modular design reshapes how AI gets built, deployed, and scaled. Instead of one-size-fits-all models, this approach lets you assemble only what your application needs. The result? Faster development, tighter performance, and smarter resource use.

In this article, we break down the core ideas behind modular AI development through the lens of the Smallest AI Atoms framework and show how it’s redefining what practical, scalable AI can look like.

Understanding Modular Design in AI

What is Modular Design?

Modular design involves constructing systems by dividing them into smaller, independent units, each with a focused purpose. These units, or “modules,” function autonomously while contributing to a cohesive whole. In the context of AI, modular design allows developers to isolate tasks, simplify system updates, and build adaptable solutions that respond quickly to real-world needs.

This approach shifts the focus from building a single, monolithic AI model to designing smaller, specialized components that work together. It’s especially advantageous when AI needs to be deployed in resource-constrained environments or customized for distinct use cases.
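As a rough illustration of the idea, the sketch below treats each module as a small function with a shared signature and assembles them by name. The `Module` type, the `registry` dictionary, and the two example modules are hypothetical names chosen for this example; they are not part of any particular framework.

```python
from typing import Callable, Dict, List

# Assumption for this sketch: every module takes text and returns text.
Module = Callable[[str], str]


def normalize(text: str) -> str:
    """Collapse repeated whitespace and lowercase the input."""
    return " ".join(text.split()).lower()


def redact_digits(text: str) -> str:
    """Replace every digit with '#'."""
    return "".join("#" if ch.isdigit() else ch for ch in text)


def run_modules(text: str, modules: Dict[str, Module], order: List[str]) -> str:
    """Apply the named modules in order; each one sees only the previous output."""
    for name in order:
        text = modules[name](text)
    return text


# Only the modules an application needs are registered and run.
registry: Dict[str, Module] = {"normalize": normalize, "redact": redact_digits}
result = run_modules("  Call  555 0100 ", registry, ["normalize", "redact"])
print(result)
```

Because each module depends only on the shared signature, one can be replaced or dropped without touching the others.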

Benefits of Modular Design

- Flexibility: Swap, upgrade, or repurpose modules without overhauling the entire system. This agility makes it easier to integrate new tools or evolve with emerging technologies.

- Scalability: Add modules as your needs grow, whether expanding functionality, entering new markets, or evolving your product offerings. Each addition enhances capability without disrupting the core architecture.

- Maintainability: Troubleshooting becomes more targeted and less disruptive. Problems are isolated and fixed at the module level, reducing system downtime and simplifying updates.

- Cost-Effectiveness: Implement only what you need. A modular build reduces waste, lowers infrastructure costs, and allows for more strategic resource allocation, which is especially useful for startups or teams with lean budgets.

This modular thinking lays the groundwork for frameworks that are composable by design. One such example is Smallest AI Atoms.

Building Reliable AI Agents with Modular Frameworks

The Importance of Reliability

Reliability isn’t a bonus; it’s the baseline. For AI agents to deliver value, they must operate consistently across different inputs, edge cases, and environments. Modular frameworks make this possible by allowing systems to be built from stable, self-contained components that can evolve without breaking the whole.

Core Functions of AI Agents

- Perception: The agent collects information from sensors, APIs, or user inputs. It then interprets this data in context, identifying patterns, anomalies, or triggers that require a response.

- Decision-Making: Based on the perceived data, the agent evaluates possible actions using logic, models, or predefined rules. The decision process is optimized for speed, relevance, and accuracy.

- Execution: After deciding on the best course of action, the agent acts, whether by responding to a user, automating a backend task, or directing a machine. Modular design allows this phase to integrate cleanly with external systems.
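The three functions above can be sketched as separate, swappable callables wired into one agent step. This is a minimal illustration under stated assumptions: the `Agent` class, the temperature input, and the 25-degree threshold are all hypothetical and stand in for whatever sensors, models, and actions a real agent would use.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Agent:
    """Holds one callable per core function so each can be replaced on its own."""
    perceive: Callable[[dict], float]
    decide: Callable[[float], str]
    execute: Callable[[str], str]

    def step(self, raw_input: dict) -> str:
        observation = self.perceive(raw_input)  # perception
        action = self.decide(observation)       # decision-making
        return self.execute(action)             # execution


def read_temperature(raw: dict) -> float:
    """Perception: turn raw input into a number the agent can reason about."""
    return float(raw["temperature_c"])


def threshold_rule(temp: float) -> str:
    """Decision-making: a predefined rule (hypothetical threshold)."""
    return "cool" if temp > 25.0 else "idle"


def send_command(action: str) -> str:
    """Execution: stand-in for a call to an external system."""
    return f"command sent: {action}"


agent = Agent(read_temperature, threshold_rule, send_command)
outcome = agent.step({"temperature_c": "28.5"})
print(outcome)
```

Replacing `threshold_rule` with a learned model would change only the decision module; perception and execution stay as they are.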

Key Features for Reliability

To build reliable AI agents, focus on these four pillars:

- Accuracy: Ensure your AI agents provide precise results that are aligned with real-world scenarios.

- Robustness: Design agents that can handle unexpected inputs and environmental changes without failure.

- Explainability: Implement mechanisms that allow AI agents to articulate their decision-making processes, fostering trust among users.

- Fault Tolerance: Build resilience into your AI agents, enabling them to continue functioning correctly even when components fail.
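Fault tolerance in particular lends itself to a short example. One common pattern, sketched here with hypothetical handler names, is to try components in priority order and fall back to the next one when a component raises an error. This is one possible approach, not a prescribed mechanism of any framework.

```python
from typing import Callable, Sequence

Handler = Callable[[str], str]


def with_fallback(handlers: Sequence[Handler], default: str) -> Handler:
    """Return a handler that tries each component in order and skips failures."""
    def call(query: str) -> str:
        for handler in handlers:
            try:
                return handler(query)
            except Exception:
                # This component failed; move on to the next one.
                continue
        # Every component failed, so return a safe default reply.
        return default
    return call


def primary_model(query: str) -> str:
    """Hypothetical component that is currently failing."""
    raise TimeoutError("primary model unavailable")


def rule_based_backup(query: str) -> str:
    """Hypothetical simpler component used when the primary fails."""
    return f"backup answer for: {query}"


answer = with_fallback([primary_model, rule_based_backup], "please try again later")
reply = answer("order status")
print(reply)
```

In a real system the `except` branch would usually log the failure as well, so that silent fallbacks do not hide a broken component.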

This brings us to a key question: how does modularity shape how AI systems are built and scaled?

The Role of Modularity in AI Development

Enhancing Modularity

Modularity allows AI systems to evolve without requiring complete rewrites. By breaking down agents into task-specific units, each independently deployable and upgradeable, you build a framework that supports iteration, experimentation, and long-term maintenance. This separation of concerns makes your architecture more agile and resilient.

Examples of Modular AI Applications

- Chatbots: These agents feature discrete modules for natural language understanding (NLU), response generation, and system integration. Each component can be tuned or replaced independently to improve accuracy or add functionality.

- Recommendation Systems: These systems often use dedicated modules for user profiling, filtering logic, content ranking, and feedback loops. The modular approach ensures that improvements in one part don’t introduce regressions elsewhere.
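To make the chatbot example concrete, here is a minimal sketch with a separate NLU module and response module. The keyword matcher, intent names, and reply templates are invented for illustration; a production system would likely use a trained model for the NLU step.

```python
from typing import Callable

NLU = Callable[[str], str]        # message -> intent
Responder = Callable[[str], str]  # intent -> reply

# Hypothetical reply templates keyed by intent.
RESPONSES = {
    "refund_request": "I can help you start a refund.",
    "greeting": "Hello! How can I help?",
    "unknown": "Sorry, I did not understand that.",
}


def keyword_nlu(message: str) -> str:
    """Very simple intent detection based on keywords."""
    text = message.lower()
    if "refund" in text:
        return "refund_request"
    if "hello" in text:
        return "greeting"
    return "unknown"


def template_responder(intent: str) -> str:
    """Look up a canned reply for the detected intent."""
    return RESPONSES.get(intent, RESPONSES["unknown"])


def build_chatbot(nlu: NLU, responder: Responder) -> Callable[[str], str]:
    """Wire the two modules together; either can be replaced independently."""
    def chatbot(message: str) -> str:
        return responder(nlu(message))
    return chatbot


bot = build_chatbot(keyword_nlu, template_responder)
print(bot("I want a refund"))
```

Swapping `keyword_nlu` for a better classifier requires no change to `template_responder`, as long as both agree on the intent names.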

Benefits of Modularity in AI

- Targeted Iteration: Update individual modules without affecting the full pipeline, speeding up experimentation and innovation.

- Faster Time to Deployment: Develop and test modules in parallel, reducing cycle time and accelerating feature delivery.

- Simplified Debugging: When performance dips or bugs emerge, localized logic makes it easier to isolate issues and deploy fixes with minimal disruption.

While modularity lays the groundwork for adaptability, scalability ensures that systems grow without breaking. That’s where the next principle comes into play.

Scalability in AI Frameworks

Understanding Scalability

Scalability separates short-term AI projects from long-term, adaptable systems. It refers to the system’s ability to grow, handling more data, users, or tasks without compromising speed or accuracy. AI must scale seamlessly alongside your needs to remain relevant as your operations expand.

Types of Scalability

- Horizontal Scalability: This involves distributing workloads across multiple servers or nodes. Think of it as adding more lanes to a highway, letting more traffic flow without causing congestion. It is well suited to real-time data pipelines or high-traffic APIs.

- Vertical Scalability: This focuses on increasing the capability of existing infrastructure. By adding memory, CPU, or storage, the same machine can take on heavier loads, which is ideal for data-heavy models running in controlled environments.
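Horizontal scaling depends on a rule for deciding which node handles which piece of work. The sketch below shows one simple option, hash-based partitioning, using hypothetical request keys such as `user-0`. It only computes the assignment; it does not start any real workers.

```python
import zlib
from collections import defaultdict
from typing import Dict, List


def assign_worker(key: str, worker_count: int) -> int:
    """Map a key to a worker index using a stable hash (CRC32)."""
    return zlib.crc32(key.encode("utf-8")) % worker_count


def partition(keys: List[str], worker_count: int) -> Dict[int, List[str]]:
    """Group keys by the worker that would handle them."""
    shards: Dict[int, List[str]] = defaultdict(list)
    for key in keys:
        shards[assign_worker(key, worker_count)].append(key)
    return dict(shards)


requests = [f"user-{i}" for i in range(8)]  # hypothetical request keys
two_workers = partition(requests, 2)
four_workers = partition(requests, 4)       # "adding lanes": same keys, more workers
print(two_workers)
print(four_workers)
```

A stable hash keeps the same key on the same worker between runs. Note that with plain modulo hashing, changing the worker count reassigns many keys; consistent hashing is a common way to reduce that reshuffling.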

Benefits of Scalability

- Operational Flexibility: Scalable AI systems flex with demand, whether a seasonal spike or a permanent uptick, without requiring a rebuild.

- Strategic Cost Management: Pay-as-you-grow infrastructure means you invest in capacity as needed, avoiding costly overprovisioning.

- Reliability at Scale: Performance remains consistent even as user interactions increase, ensuring trust and smooth user experiences at every growth stage.

Once scalability is baked into the system, the next step is execution, turning modular principles into functional, real-world AI agents.

Steps to Build a Modular AI Agent

Building a modular AI agent requires a structured approach, one that prioritizes clarity, reliability, and adaptability from the start. Unlike monolithic systems, modular agents thrive on clearly defined roles and streamlined communication between components. The steps below outline how to break complexity into manageable parts while keeping the system easy to scale and update.

Step 1: Define Objectives

Clearly outline the goals for your AI agent and the system requirements. Consider future use cases and user demands to ensure your design is forward-thinking.

Step 2: Choose a Modular Framework

Select a modular framework that aligns with your system requirements. If a suitable framework is unavailable, consider developing one tailored to your needs.

Step 3: Design for Scalability

Ensure that your hardware and software configurations allow horizontal and vertical scalability. This foresight will enable your system to grow seamlessly.

Step 4: Implement Testing and Monitoring

Establish a robust testing and monitoring system to track performance and identify flaws. Continuous logging will provide valuable insights into your AI agent’s functionality.

Step 5: Debug and Optimize

Debug your system regularly and optimize it based on performance data. This iterative process will help you refine your AI agent over time.

To better understand how these agents function at a technical level, it helps to break down the foundational pieces that hold the system together.

Key Components of Modular AI Frameworks

Before you can build effectively, you need to understand the building blocks. Each component plays a distinct role in creating AI agents that are fast, flexible, and easy to maintain.

Data Management Layer

The data management layer is the foundation of your AI agent. It handles data ingestion, preprocessing, storage, and retrieval. Modular data pipelines offer several advantages:

- Scalability: As data volume increases, you can add or modify components to accommodate growth.

- Independent Updates: Each module can be updated without disrupting the data pipeline.

- Reusability: Standard data modules can be utilized across multiple projects, reducing development time.
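A modular data pipeline can be sketched as a list of small stages that share one signature. The record layout (dictionaries with a `text` field) and the two stage functions are assumptions made for this example only.

```python
from typing import Callable, Iterable, List

Record = dict
Stage = Callable[[Iterable[Record]], Iterable[Record]]


def drop_incomplete(records: Iterable[Record]) -> Iterable[Record]:
    """Filter out records with a missing or empty 'text' field."""
    return (r for r in records if r.get("text"))


def strip_text(records: Iterable[Record]) -> Iterable[Record]:
    """Trim surrounding whitespace from the 'text' field."""
    return ({**r, "text": r["text"].strip()} for r in records)


def build_pipeline(stages: List[Stage]) -> Stage:
    """Chain stages so each consumes the previous stage's output."""
    def run(records: Iterable[Record]) -> Iterable[Record]:
        for stage in stages:
            records = stage(records)
        return records
    return run


# Stages can be added, removed, or reordered without editing one another.
pipeline = build_pipeline([drop_incomplete, strip_text])
cleaned = list(pipeline([{"text": "  hi "}, {"text": ""}, {"id": 3}]))
print(cleaned)
```

The same `strip_text` stage could be reused in another project's pipeline unchanged, which is the reusability benefit listed above.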

Model Building Layer

This layer focuses on creating AI models for specific objectives. With a modular design, you can iterate and experiment with models rapidly. Consider using pre-trained models to save time and resources.

Deployment Layer

Once your AI agent is built, it must be deployed in production environments. This layer ensures that trained models perform efficiently and reliably. Tools like Docker and Kubernetes can facilitate modular deployment, enhancing portability and resource utilization.

Once you understand how these pieces fit together, the next step is ensuring they perform consistently in the real world, regardless of changing inputs or edge conditions.

Ensuring Reliability in AI Agents

Reliability transforms AI from a clever tool into a trusted partner. In real-world environments, agents must perform consistently, not just when things go right but also when conditions shift or systems fail.

Testing and Validation

Your AI agents should undergo rigorous testing before and after deployment. Unit testing validates individual components, integration testing verifies that modules communicate correctly, and stress testing checks that the system holds up under heavy load.
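As a small example of unit testing at the module level, the sketch below tests a hypothetical ranking module in isolation using Python's standard `unittest` library. The `rank_by_score` function and its input format are invented for illustration.

```python
import unittest


def rank_by_score(items):
    """Hypothetical ranking module: highest score first."""
    return sorted(items, key=lambda item: item["score"], reverse=True)


class RankByScoreTest(unittest.TestCase):
    def test_highest_score_first(self):
        ranked = rank_by_score([{"id": "a", "score": 0.2}, {"id": "b", "score": 0.9}])
        self.assertEqual([item["id"] for item in ranked], ["b", "a"])

    def test_empty_input(self):
        self.assertEqual(rank_by_score([]), [])


# Run the test case programmatically so the sketch works as a plain script.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(RankByScoreTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Because the module has no dependency on the rest of the agent, its tests run quickly and fail only when the module itself changes behavior.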

Monitoring and Logging

Continuous monitoring and logging are essential for maintaining reliability. Track performance metrics such as accuracy, latency, and processing time to identify areas for improvement.
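One lightweight way to collect latency per module is a timing decorator, sketched below with the standard `logging` and `time` libraries. The `timed` decorator, the `latencies_ms` store, and the `classify` function are hypothetical; a real deployment would more likely export these numbers to a metrics system.

```python
import logging
import time
from functools import wraps

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("agent.metrics")

# In-memory store of observed latencies per module (illustrative only).
latencies_ms = {}


def timed(module_name):
    """Decorator that records and logs how long each call takes."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            finally:
                # Runs whether the call succeeded or raised.
                elapsed_ms = (time.perf_counter() - start) * 1000
                latencies_ms.setdefault(module_name, []).append(elapsed_ms)
                logger.info("%s took %.2f ms", module_name, elapsed_ms)
        return wrapper
    return decorator


@timed("classifier")
def classify(text):
    """Hypothetical module being monitored."""
    return "long" if len(text) > 20 else "short"


label = classify("hello")
```

Since the decorator wraps each module separately, a latency regression can be traced to the specific module that caused it.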

Human Oversight

Incorporating human oversight into the decision-making process enhances accountability and reliability. Regular reviews of model outputs help ensure ethical considerations are addressed.
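One simple way to build in human oversight, sketched here under assumptions, is to route low-confidence outputs to a review queue instead of acting on them automatically. The `Prediction` fields and the 0.8 threshold are hypothetical and would need to be tuned for a real use case.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # assumed to be in the range 0.0 to 1.0


def route(
    predictions: List[Prediction], threshold: float = 0.8
) -> Tuple[List[Prediction], List[Prediction]]:
    """Split predictions into auto-approved and needs-human-review lists."""
    approved = [p for p in predictions if p.confidence >= threshold]
    needs_review = [p for p in predictions if p.confidence < threshold]
    return approved, needs_review


batch = [
    Prediction("t-1", "approve_refund", 0.95),
    Prediction("t-2", "deny_refund", 0.55),
]
approved, needs_review = route(batch)
print([p.item_id for p in approved], [p.item_id for p in needs_review])
```

Confidence-based routing covers only uncertain cases; periodic review of a sample of auto-approved outputs is still needed to catch confident mistakes.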

Conclusion

Smallest AI Atoms is more than a framework; it’s a practical blueprint for building AI systems that evolve with your needs. Its modular design allows you to iterate fast, deploy smarter, and scale without starting from scratch. Whether solving a narrow task or laying the groundwork for something bigger, this approach brings structure without rigidity.

By applying modular thinking, you shift from building one-off models to designing adaptable, maintainable systems. That’s where long-term value lives. With Smallest AI Atoms, you’re not just building AI; you’re building a system that can grow, adapt, and deliver in a changing environment.